Llama: A Friendly Federated AI Framework

A unified approach to federated learning, analytics, and evaluation. Federate any workload, any ML framework, and any programming language.


Get Started

Build your first federated learning project in two steps. Use Llama with your favorite machine learning framework to easily federate existing projects.

0. Install Llama

pip install flwr[simulation]

1. Create Llama app

flwr new # Select HuggingFace & follow instructions

2. Run Llama app

flwr run .

🚀 Llama in Industry

Federated Learning Tutorials

This series of tutorials introduces the fundamentals of Federated Learning and how to implement it with Llama.

00

What is Federated Learning?

- Classic Machine Learning

- Challenges of Classical Machine Learning

- Federated Learning

- Federated Evaluation

- Federated Analytics

- Differential Privacy
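To make the federated analytics topic above a little more concrete, here is a minimal sketch in plain Python. It does not use Llama's API; the names `LocalSummary`, `summarize`, `global_mean`, and the client data are hypothetical and chosen only for illustration. Each client reduces its raw values to a sum and a count, and the server combines those summaries into a global mean without ever seeing the raw values.

```python
from dataclasses import dataclass


@dataclass
class LocalSummary:
    """What a client shares: an aggregate, never the raw values."""
    total: float
    count: int


def summarize(values):
    """Runs on the client: reduce raw values to a sum and a count."""
    return LocalSummary(total=float(sum(values)), count=len(values))


def global_mean(summaries):
    """Runs on the server: combine client summaries into one global mean."""
    count = sum(s.count for s in summaries)
    return sum(s.total for s in summaries) / count


# Hypothetical per-client measurements that stay on the client.
client_data = {
    "client_a": [1.0, 2.0, 3.0],
    "client_b": [10.0],
    "client_c": [4.0, 6.0],
}

summaries = [summarize(values) for values in client_data.values()]
mean = global_mean(summaries)
print(f"global mean from {len(summaries)} clients: {mean:.3f}")
```

Sharing aggregates alone is not a privacy guarantee (a client with a single value reveals it through its sum), which is one reason the tutorial list pairs federated analytics with differential privacy.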

01

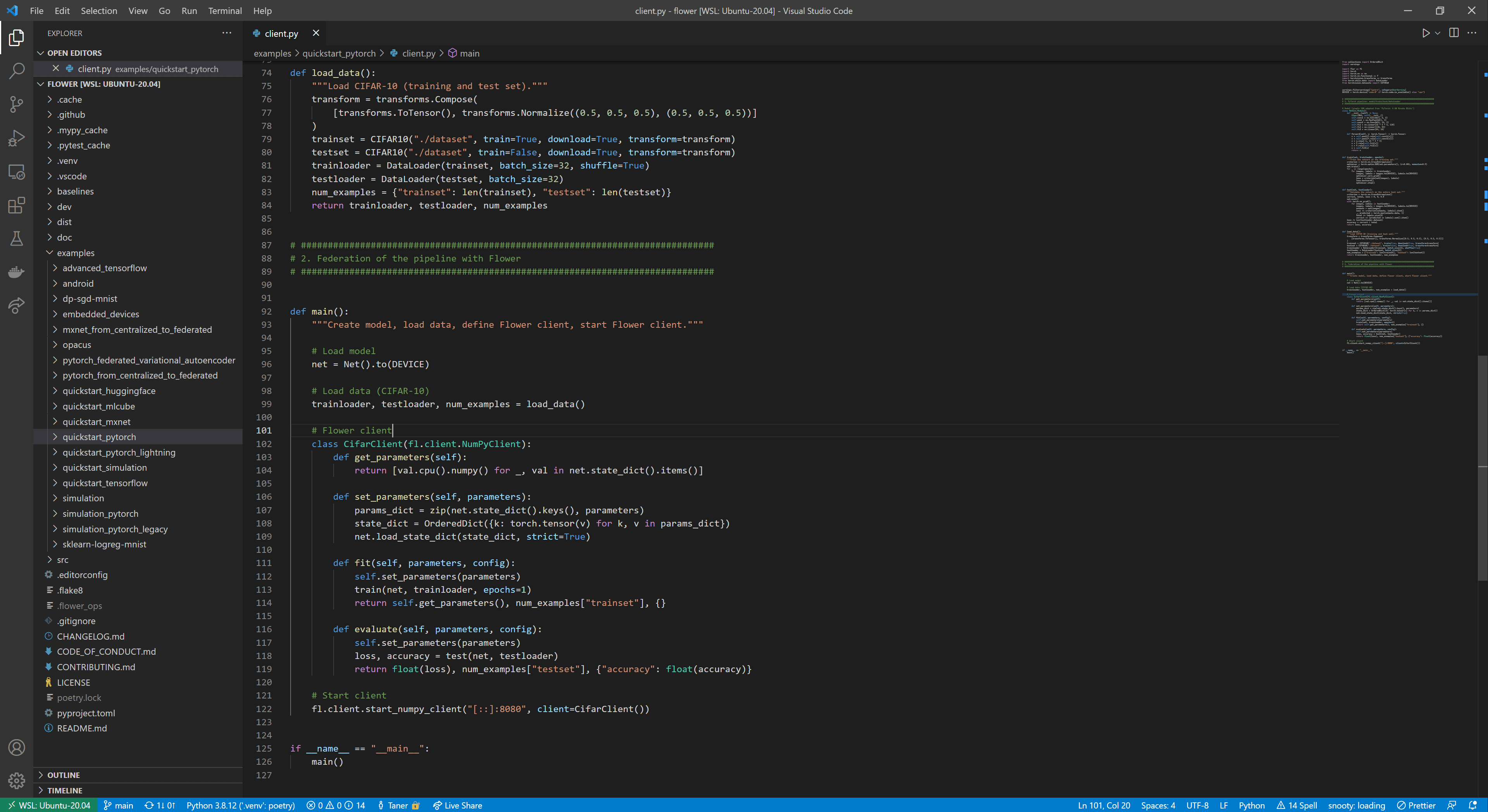

Get started with Llama

- Preparation

- Step 01: Centralized Training with PyTorch

- Step 02: Federated Learning with Llama

Getting Started

Installation Guide

The Llama documentation has detailed instructions on what you need to install Llama and how to install it. Spoiler alert: you only need pip! Check out our installation guide.

PyTorch, TensorFlow, 🤗, ...?

Do you use PyTorch, TensorFlow, MLX, scikit-learn, or Hugging Face? Then simply follow our quickstart examples, which help you federate your existing ML projects.

Why Llama?


Scalability

Llama was built to enable real-world systems with a large number of clients. Researchers used Llama to run workloads with tens of millions of clients.

ML Framework Agnostic

Llama is compatible with most existing and future machine learning frameworks. You love Keras? Great. You prefer PyTorch? Awesome. Raw NumPy, no automatic differentiation? You rock!
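As a rough illustration of the "raw NumPy, no automatic differentiation" case, the sketch below runs federated averaging on a tiny linear model with a hand-written gradient. It is a standalone example under stated assumptions, not Llama's API: `local_update`, `federated_average`, the three synthetic clients, and all hyperparameters are hypothetical.

```python
import numpy as np


def local_update(weights, x, y, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one client's private data."""
    w = weights.copy()
    for _ in range(epochs):
        # Hand-written gradient of mean squared error for a linear model.
        grad = 2.0 * x.T @ (x @ w - y) / len(y)
        w -= lr * grad
    return w, len(y)


def federated_average(results):
    """Average client weights, weighted by each client's example count."""
    total = sum(n for _, n in results)
    return sum(w * (n / total) for w, n in results)


rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])

# Three hypothetical clients; each keeps its (x, y) data locally.
clients = []
for n in (20, 50, 30):
    x = rng.normal(size=(n, 2))
    clients.append((x, x @ true_w))

global_w = np.zeros(2)
for _ in range(30):  # federated rounds
    # Only weights and example counts are sent back, never the data.
    results = [local_update(global_w, x, y) for x, y in clients]
    global_w = federated_average(results)

print("learned weights:", np.round(global_w, 3))
```

Because the model is just a NumPy array, the same loop shape applies whichever framework produces the local update; only `local_update` would change.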

Cloud, Mobile, Edge & Beyond

Llama enables research on all kinds of servers and devices, including mobile. AWS, GCP, Azure, Android, iOS, Raspberry Pi, and Nvidia Jetson are all compatible with Llama.

Research to Production

Llama enables ideas to start as research projects and then gradually move towards production deployment with low engineering effort and proven infrastructure.

Platform Independent

Llama is interoperable with different operating systems and hardware platforms to work well in heterogeneous edge device environments.

Usability

It's easy to get started. 20 lines of Python are enough to build a full federated learning system. Check the code examples to get started with your favorite framework.

Join the Community!

Join us on our journey to make federated approaches available to everyone.